January 21, 2024

OrionStar, a pioneering AI company, has made a splash in the tech world with the launch of Orion-14B, an open-source multilingual large language model optimized for enterprise applications. The announcement came during a keynote by Fu Sheng, Chairman of OrionStar, at the company's recent large language model release event.

Innovative Approach to AI Enterprise Solutions

Fu Sheng's keynote, "Innovating for Enterprises in the AI Trend: From Technical Enthusiasm to Enterprise Implementation - Private Large Language Models are Critical," focused on what Orion-14B can do when integrated with proprietary enterprise data. In that setting, the model is designed to deliver results comparable to those of a hundred-billion-parameter model, strengthening its text understanding and generation across diverse contexts.

State-of-the-Art Performance

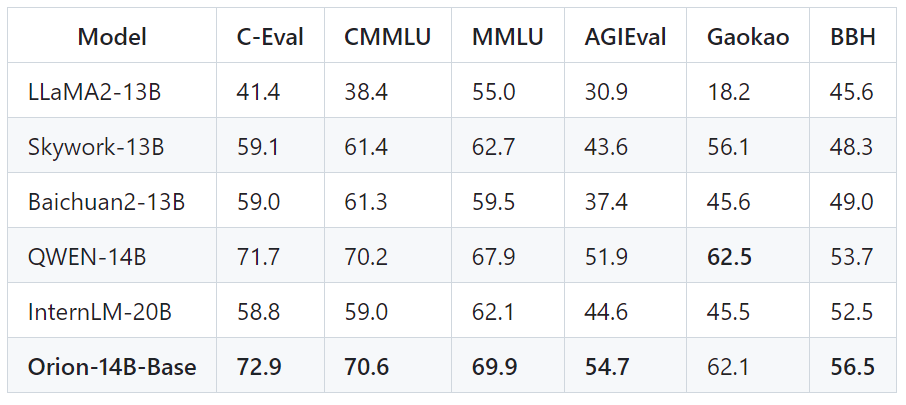

Orion-14B, boasting 14 billion parameters, has been meticulously trained on a vast dataset from OrionStarAI, totaling 2.5 trillion tokens. This dataset encompasses a wide array of languages, professional jargon, and domain-specific knowledge, ensuring the model's proficiency in various contexts.

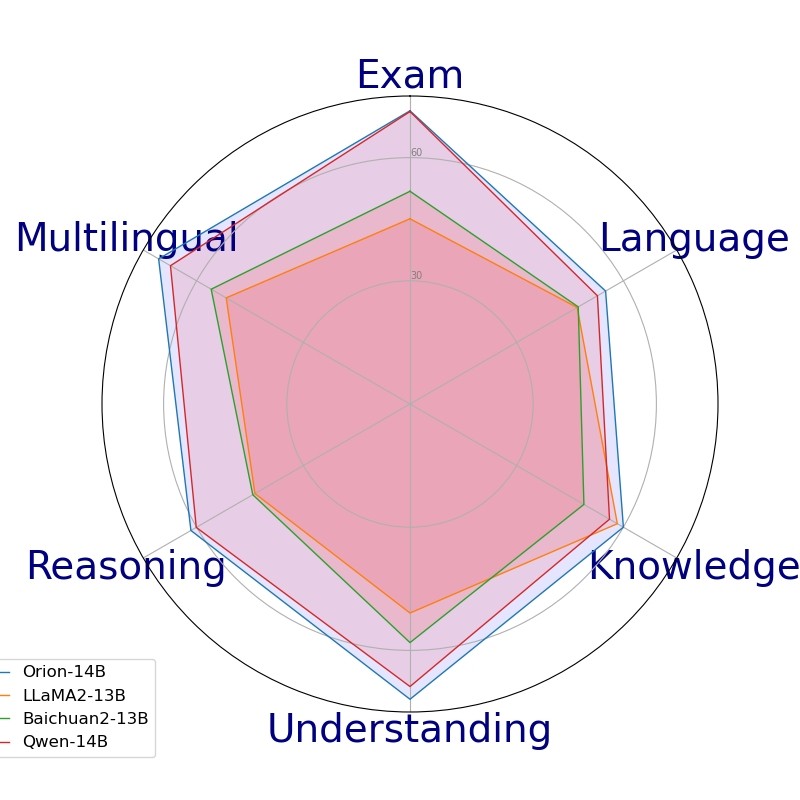

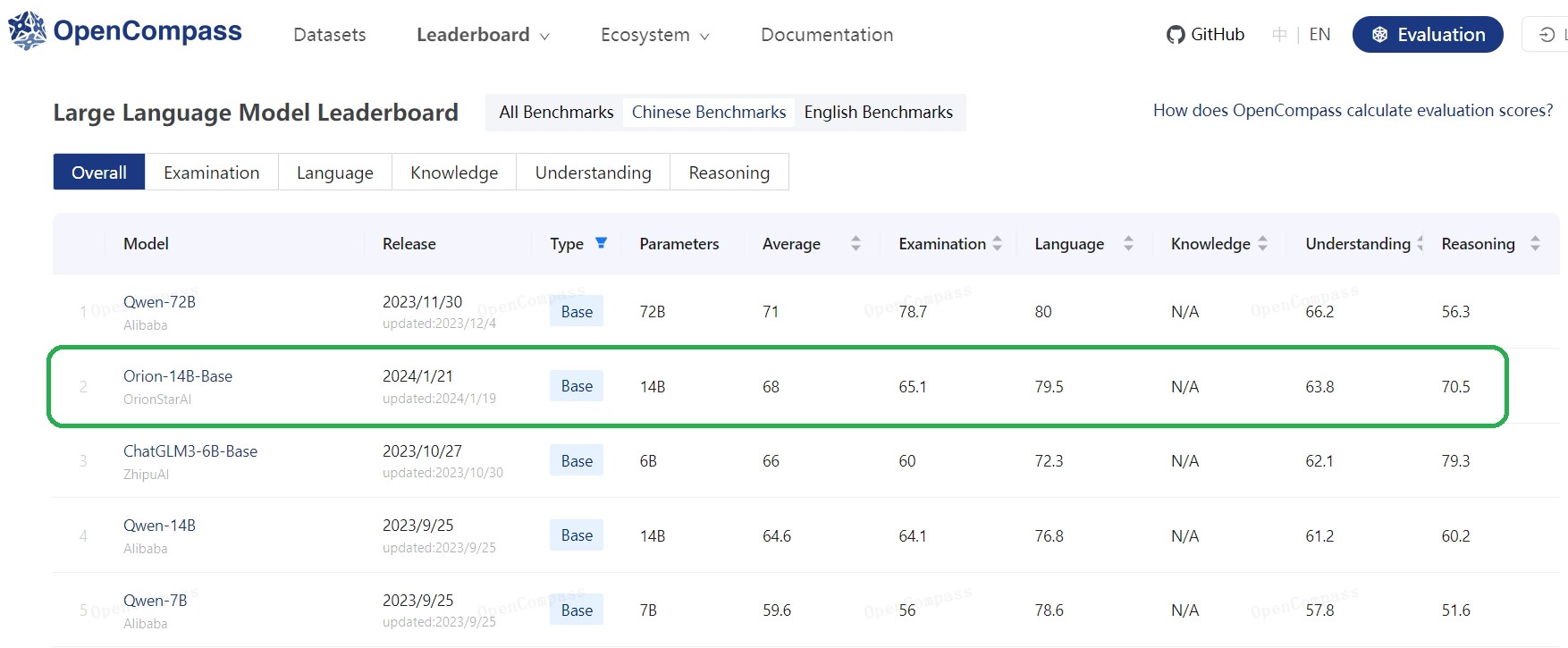

The model outperforms its peers on third-party test sets such as MMLU, C-Eval, CMMLU, GAOKAO, and BBH. In a comprehensive evaluation by OpenCompass, Orion-14B ranked first on Chinese-language datasets among models with fewer than 70 billion parameters.

Efficiency and Multilingual Excellence

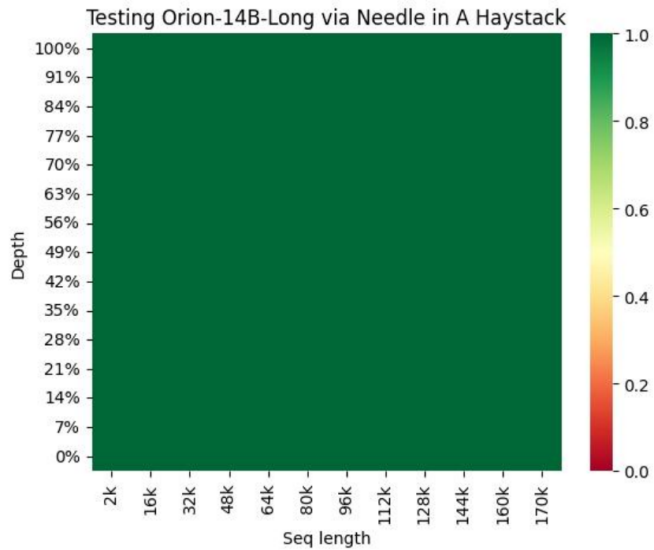

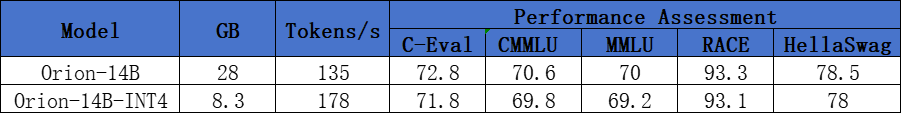

Orion-14B can process extremely long texts: it can read a novel in one pass and accurately answer questions about key information embedded in it. The quantized versions of the model are 70% smaller and 30% faster at inference, with a performance loss of less than 1%. The model runs on a consumer graphics card in the thousand-yuan price class, reaching an inference speed of up to 31 tokens per second (approximately 50 characters per second) on an NVIDIA RTX 3060.
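To put these figures in perspective, the short calculation below estimates what a 70% size reduction could mean for the model's weights and what the quoted throughput implies per token. It is a rough sketch: the article does not state the precision of the unquantized weights, so the 16-bit baseline (2 bytes per parameter) is an assumption, and the result covers weights only, not activations or cache memory.

```python
# Figures taken from the article.
PARAMS = 14e9            # 14 billion parameters
SIZE_REDUCTION = 0.70    # quantized versions are 70% smaller
TOKENS_PER_SEC = 31      # quoted speed on an NVIDIA RTX 3060
CHARS_PER_SEC = 50       # quoted approximate character rate

# Assumption (not stated in the article): the unquantized weights
# are stored as 16-bit floats, i.e. 2 bytes per parameter.
BYTES_PER_PARAM_FP16 = 2


def weight_size_gb(params: float, bytes_per_param: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


full_gb = weight_size_gb(PARAMS, BYTES_PER_PARAM_FP16)
quant_gb = full_gb * (1 - SIZE_REDUCTION)
chars_per_token = CHARS_PER_SEC / TOKENS_PER_SEC

print(f"Unquantized weights (assumed fp16): {full_gb:.1f} GB")
print(f"After a 70% reduction:              {quant_gb:.1f} GB")
print(f"Characters per token (implied):     {chars_per_token:.2f}")
```

Under that assumption, the weights shrink from roughly 28 GB to roughly 8.4 GB, and the quoted rates imply about 1.6 characters per token.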

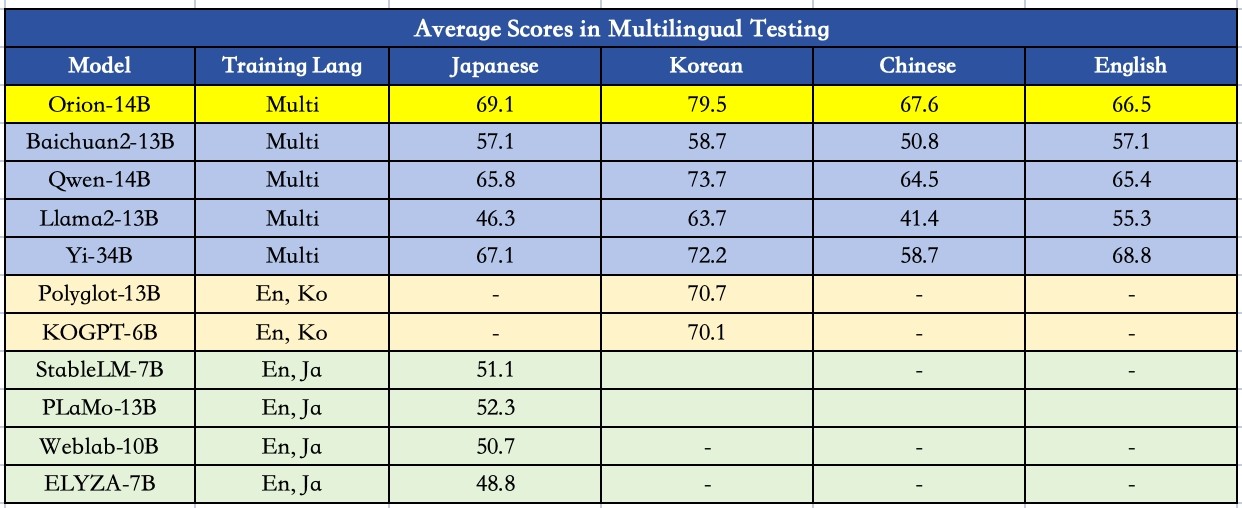

The model's multilingual prowess is equally impressive, with top rankings in Japanese and Korean language proficiency tests. It also excels in Chinese and English, as assessed by the OpenCompass language evaluation set.

Tailored for Enterprise Applications

OrionStar has introduced a suite of fine-tuned models to meet the specific needs of enterprise applications. Orion-14B's application potential rivals that of hundred-billion-parameter models, making it well suited to digital employee applications.

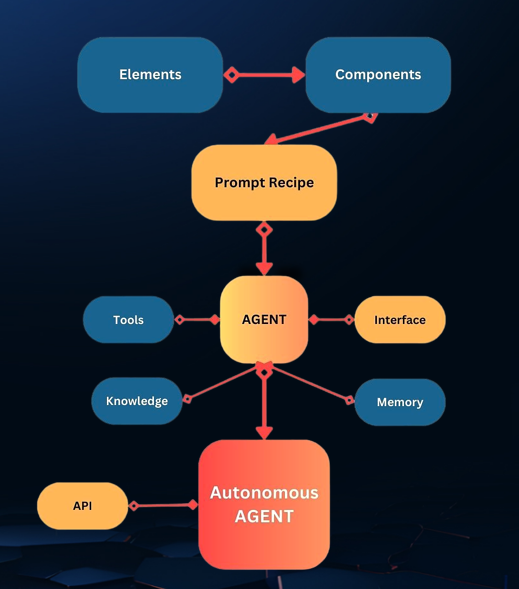

The company focuses on two key technologies: RAG (Retrieval-Augmented Generation) and Agent. Orion-14B-RAG enables rapid integration with enterprise knowledge bases and the creation of customized applications, while Orion-14B-Plugin strengthens the model's ability to solve complex problems by invoking the most suitable tools for each user query.
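The general idea behind retrieval-augmented generation can be sketched in a few lines: pick the knowledge-base passages most relevant to a query, then place them in the prompt sent to the model. The example below is a toy illustration only, not OrionStar's implementation. The function names, the sample knowledge base, and the word-overlap scoring are all hypothetical stand-ins; a production system would typically use embedding-based search and pass the prompt to a model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def score(query: str, passage: str) -> int:
    """Toy relevance score: number of word occurrences shared by both texts."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages with the highest overlap score."""
    return sorted(passages, key=lambda p: score(query, p), reverse=True)[:k]


def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the question in retrieved context."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical enterprise knowledge base, invented for illustration.
KNOWLEDGE_BASE = [
    "Refunds are processed within 14 days of the return request.",
    "The support desk is open on weekdays from 9:00 to 18:00.",
    "Enterprise plans include a dedicated account manager.",
]

prompt = build_prompt("How long do refunds take?", KNOWLEDGE_BASE, k=1)
print(prompt)
```

The resulting prompt would then be sent to the language model, which answers from the supplied context rather than from its training data alone.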

OrionStar's AI Journey

Founded in 2016 with a strategic "All in AI" approach, OrionStar has been at the vanguard of AI technology development. The company has assembled a team of experts, including PhDs from leading global companies like Meta, Yahoo, and Baidu, and has accumulated billions of real user queries and trillions of tokens over seven years, providing a robust foundation for research and development.

Looking Ahead

OrionStar is currently developing a Mixture-of-Experts (MoE) model, aiming to rival the intelligence level of trillion-parameter models in all aspects.

For more information, visit https://github.com/OrionStarAI/Orion or https://huggingface.co/OrionStarAI.